It's been a year since the announcement of the dbt-flink-adapter, and the concept of enabling real-time analytics with dbt and Flink SQL is simply brilliant. The dbt-flink-adapter enables the construction of Flink SQL jobs using dbt models with declarative SQL and runs them via the Flink SQL Gateway. It's an excellent tool for code modularization, versioning and prototyping. You can learn more about how it works and how to use it here. The latest version, 1.3.11, introduces a new feature that also facilitates managing the lifecycle of Flink jobs in session clusters. This feature fills the gap between prototyping, testing, and deploying across multiple environments.

In this article, I'll explore the dbt-flink-adapter's pivotal role in transforming data streaming by integrating dbt's SQL models with Flink SQL. We'll delve into how this adapter improves analytics and streamlines the management of data job lifecycles, offering a more straightforward path from development to deployment. Discover the practical benefits and latest enhancements of the dbt-flink-adapter that can significantly improve your data operations and workflow efficiency.

When you type the command dbt run, the project is transformed into SQL statements and sent to the Flink SQL Gateway via the REST API. Consequently, a new job is created in session mode. If you execute the same command again, a second instance of the job is created. Flink doesn't attempt to stop the existing job before creating a new one; that is not its responsibility.
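As a rough illustration of that flow, the sketch below builds (but does not send) the two HTTP requests a client would make against the SQL Gateway's v1 REST API: one to open a session and one to submit a statement. The gateway address, the helper names and the sample statement are assumptions made for illustration; the adapter's actual client code may look different.

```python
import json
from urllib.request import Request

# Hypothetical local address of a Flink SQL Gateway (an assumption).
GATEWAY_URL = "http://localhost:8083"


def open_session_request(base_url: str = GATEWAY_URL) -> Request:
    """Build the POST request that opens a new SQL Gateway session."""
    return Request(
        url=f"{base_url}/v1/sessions",
        data=json.dumps({}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def execute_statement_request(
    session_handle: str, statement: str, base_url: str = GATEWAY_URL
) -> Request:
    """Build the POST request that submits one SQL statement to a session."""
    return Request(
        url=f"{base_url}/v1/sessions/{session_handle}/statements",
        data=json.dumps({"statement": statement}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Nothing in these requests refers to a job that was started earlier, which is why submitting the same statement twice results in two jobs. Sending a request with `urllib.request.urlopen(req)` would require a running gateway.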

The Flink SQL Client (starting from version 1.17) supports statements such as SHOW JOBS and STOP JOB. With this, you can list all jobs in the session cluster and stop any of them. If you need help identifying the job, the dbt-flink-adapter does this for you!
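One way to picture that lookup: take the rows returned by SHOW JOBS, pick the running job whose name matches, and build the corresponding STOP JOB statement. The row layout (dicts keyed by `job id`, `job name` and `status`) and the helper names are assumptions for this sketch, not the adapter's internals, and the job IDs in the usage example are made up.

```python
from typing import Dict, List, Optional


def find_running_job(rows: List[Dict[str, str]], pipeline_name: str) -> Optional[str]:
    """Return the ID of the first RUNNING job with the given name, if any."""
    for row in rows:
        if row["job name"] == pipeline_name and row["status"] == "RUNNING":
            return row["job id"]
    return None


def stop_job_statement(job_id: str, with_savepoint: bool = False) -> str:
    """Build a Flink SQL STOP JOB statement for the given job ID."""
    suffix = " WITH SAVEPOINT" if with_savepoint else ""
    return f"STOP JOB '{job_id}'{suffix}"


# Made-up SHOW JOBS result used only for illustration.
sample_rows = [
    {"job id": "aaa111", "job name": "high_loan", "status": "CANCELED"},
    {"job id": "bbb222", "job name": "high_loan", "status": "RUNNING"},
    {"job id": "ccc333", "job name": "other_job", "status": "RUNNING"},
]
job_id = find_running_job(sample_rows, "high_loan")
statement = stop_job_statement(job_id, with_savepoint=True)
```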

The dbt-flink-adapter handles two values of the model's materialized property: view and table. The first helps organize the code; running it simply creates a Flink temporary view. The second, table, is what triggers the job. Let's configure materialized: table in the model.yml file:

    version: 2
    models:
      - name: high_loan
        config:
          materialized: table
          upgrade_mode: stateless
          job_state: running
          execution_config:
            pipeline.name: high_loan
            parallelism.default: 1
          connector_properties:
            topic: 'high-loan'

You might notice some new properties in the configuration (bold values are default):

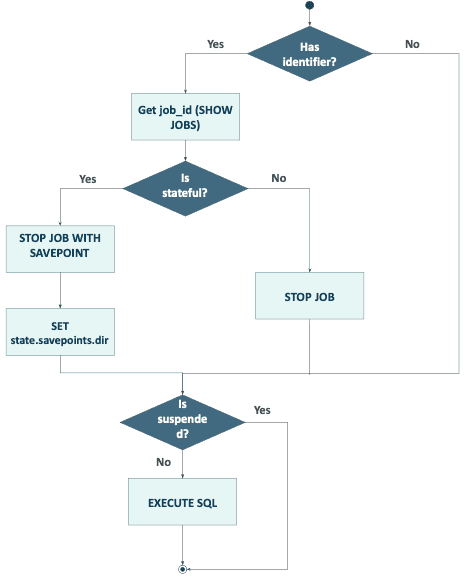

upgrade_mode (stateless, savepoint) - determines whether the job is stopped with a savepoint.

job_state (running, suspended) - allows you to suspend/start the job.

execution_config - Flink job configuration. You can set any supported SQL Client key-value property.
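To make the allowed values concrete, here is a small validation sketch for a model's config block. The function name and the error messages are hypothetical; the sketch checks only the values listed above and is not how the adapter itself validates configuration.

```python
from typing import Any, Dict, List

# Allowed values as listed in the property descriptions above.
ALLOWED_VALUES = {
    "upgrade_mode": {"stateless", "savepoint"},
    "job_state": {"running", "suspended"},
}


def validate_model_config(config: Dict[str, Any]) -> List[str]:
    """Return a list of human-readable problems found in a model config."""
    errors = []
    for key, allowed in ALLOWED_VALUES.items():
        value = config.get(key)
        if value is not None and value not in allowed:
            errors.append(f"{key} must be one of {sorted(allowed)}, got {value!r}")
    # execution_config is a mapping of Flink key-value properties.
    if not isinstance(config.get("execution_config", {}), dict):
        errors.append("execution_config must be a mapping of key-value properties")
    return errors
```

For example, `validate_model_config({"upgrade_mode": "stateful"})` reports one problem, while the high_loan configuration shown earlier passes without errors.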

Please note that you can configure default values for all your models in dbt_project.yml and override them per model in model.yml.

Once you set pipeline.name, the dbt-flink-adapter will attempt to identify the running job with that name and stop it. If you set upgrade_mode to savepoint, the job will be stopped with a savepoint. The created savepoint will then be used to restore the new job's state.

Additionally, you can suspend the job by setting job_state to suspended.
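The rules described so far can be summarized as a small planning function. This is a simplified sketch: the step names are invented labels, and treating stateless and running as the defaults is an assumption based on the example configuration. It says nothing about how the adapter implements each step.

```python
from typing import Any, Dict, List, Optional


def plan_deployment(config: Dict[str, Any], running_job_id: Optional[str]) -> List[str]:
    """Return the ordered steps for deploying one model as a Flink job."""
    steps = []
    # Assumed defaults: upgrade_mode=stateless, job_state=running.
    use_savepoint = config.get("upgrade_mode", "stateless") == "savepoint"
    target_state = config.get("job_state", "running")

    # An existing job with the same pipeline.name is always stopped first.
    if running_job_id is not None:
        steps.append("stop_with_savepoint" if use_savepoint else "stop")

    # A suspended model stops here; a running model gets a new job.
    if target_state == "running":
        restore = use_savepoint and running_job_id is not None
        steps.append("start_from_savepoint" if restore else "start")
    return steps
```

With `upgrade_mode: savepoint` and a job already running, the plan is to stop with a savepoint and then start from it; with `job_state: suspended`, the plan ends after the stop.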

The logic for upgrading the job is illustrated in the diagram below.

The dbt-flink-adapter utilizes the property execution_config.state.savepoints.dir during job upgrades (the model's value will be overwritten if the job is running). If you wish to start the job with a specific savepoint, follow these steps:

The dbt adapter executes two types of statements: CREATE VIEW and CREATE TABLE AS. Both structures are created in Flink memory, dropped, and then recreated during the job upgrade.
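A minimal sketch of what such a drop-and-recreate sequence could look like as generated Flink SQL follows. The exact statements the adapter emits are not shown in this article, so the use of IF EXISTS, the helper names and the sample query are assumptions.

```python
from typing import Dict, List


def view_statements(name: str, select_sql: str) -> List[str]:
    """Drop and recreate a view for a model materialized as 'view'."""
    return [
        f"DROP VIEW IF EXISTS {name}",
        f"CREATE VIEW {name} AS {select_sql}",
    ]


def table_statements(
    name: str, select_sql: str, connector_properties: Dict[str, str]
) -> List[str]:
    """Drop and recreate a table with CREATE TABLE AS for a 'table' model."""
    props = ", ".join(f"'{k}' = '{v}'" for k, v in connector_properties.items())
    return [
        f"DROP TABLE IF EXISTS {name}",
        f"CREATE TABLE {name} WITH ({props}) AS {select_sql}",
    ]


# Hypothetical query; only the table name and topic come from the example model.
statements = table_statements(
    "high_loan",
    "SELECT * FROM loans WHERE amount > 1000",
    {"topic": "high-loan"},
)
```

In this sketch only the CREATE TABLE AS statement would start a Flink job, which matches the role of the table materialization described earlier.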

dbt is an indispensable assistant if you need automated deployment tests. All you need to do is execute dbt run followed by dbt test in your CI/CD pipeline. Streaming is not an issue!
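In a CI/CD job, the two commands should run in sequence, with the tests skipped if the run fails. The sketch below shows one way to do that from Python; the function name and the injectable `runner` parameter are illustrative choices, and most pipelines would simply chain the two commands in a shell step.

```python
import subprocess
from typing import Callable, List, Sequence

DEFAULT_STEPS = (["dbt", "run"], ["dbt", "test"])


def _run(cmd: List[str]) -> int:
    """Run a command and return its exit code."""
    return subprocess.run(cmd, check=False).returncode


def run_dbt_steps(
    steps: Sequence[List[str]] = DEFAULT_STEPS,
    runner: Callable[[List[str]], int] = _run,
) -> bool:
    """Run each step in order; stop and return False at the first failure."""
    for cmd in steps:
        if runner(cmd) != 0:
            return False
    return True
```

Passing a fake `runner` makes the sequencing testable without dbt installed; calling `run_dbt_steps()` with the defaults requires dbt on the PATH.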

Data lineage? No problem. dbt can generate Directed Acyclic Graphs (DAGs) and documentation that illustrate the transformations between sources and models. Additionally, dbt can add metadata fields to your data. Need more detailed data lineage capabilities such as column-level or business logic-level lineage? dbt can be integrated with third-party tools like Collibra, Datafold, Atlan, and more.

dbt bridges the gap between technical and non-technical worlds. All you need to know is SQL and YAML to write and release ETLs. Furthermore, with the dbt-flink-adapter, you can create streaming pipelines in the same way!

This is an initial attempt at managing job lifecycles with dbt and Flink SQL Gateway. While it's lightweight and stateless, it lacks robustness. There's a risk of losing job progress if deployment fails between internal steps, such as stopping with a savepoint and starting the job again. Additionally, the dbt-flink-adapter won’t assist if your Flink Session cluster encounters issues. There's no mechanism to automatically restore jobs from the latest savepoint.

Furthermore, working with Flink SQL has its challenges. Upgrading stateful jobs can be tricky and sometimes not feasible. For instance, Flink doesn’t allow setting the operator’s UID via SQL. Modifying the SQL statement handled by or chained with the stateful operator can lead to failure.

Some limitations stem from the stateless nature of the dbt-flink-adapter and the stateful nature of Flink jobs. For example, a job won’t be restored from the latest savepoint if you suspend it and then set it back to running in a later run. This and other limitations could be solved by storing savepoint paths in an external database.
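To show what storing savepoint paths externally might involve, here is a minimal sketch that keeps the latest savepoint path per pipeline name in SQLite. It is not part of the dbt-flink-adapter; the class, table and column names are hypothetical, and a real setup would likely use a shared database rather than a local file.

```python
import sqlite3
from typing import Optional


class SavepointStore:
    """Keeps the most recent savepoint path for each pipeline name."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS savepoints ("
            "pipeline_name TEXT PRIMARY KEY, savepoint_path TEXT NOT NULL)"
        )

    def record(self, pipeline_name: str, savepoint_path: str) -> None:
        """Store the path, replacing any earlier one for the same pipeline."""
        self.conn.execute(
            "INSERT OR REPLACE INTO savepoints (pipeline_name, savepoint_path) "
            "VALUES (?, ?)",
            (pipeline_name, savepoint_path),
        )
        self.conn.commit()

    def latest(self, pipeline_name: str) -> Optional[str]:
        """Return the last recorded path, or None if there is none."""
        row = self.conn.execute(
            "SELECT savepoint_path FROM savepoints WHERE pipeline_name = ?",
            (pipeline_name,),
        ).fetchone()
        return row[0] if row else None
```

A deployment step could call `record` right after a job is stopped with a savepoint and `latest` before starting it again, so the path survives between separate dbt runs.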

The dbt-flink-adapter lets you start your adventure with Flink SQL and easily build and deploy your pipelines. With the new feature, you can use it in your CI/CD pipelines and manage job lifecycles across multiple environments. Getting started with streaming has never been easier!

